How NetApp IT Uses NPS in its Data Fabric

Elias Biescas: Today presenting, we have Matt Brown, who is the Executive Program Director of NetApp on NetApp. And with him we also have Kamal Vyas, Chief Cloud Architect for NetApp IT. So together, they’re going to present on what NPS is, NetApp Private Storage, and how it benefits NetApp internally. And we hope that this presentation actually helps our partners identify opportunities with this solution. So with that, I’m going to ask Matt and Kamal to introduce themselves, and please go ahead and present to us. Thank you.

Matt Brown: Sure. Excellent. Well, good morning, good afternoon, everybody. It’s a pleasure to speak with you today. Just very briefly, a little background on me. I’ve been with NetApp for 20 years. I’ve been in IT for close to 35 years now. So what I’ll be sharing today is our experience in the Cloud and how that storage really has created a very unique capability for our environment. I’m going to let Kamal introduce himself, and then I’ll come back and I’ll begin our presentation. Kamal?

Kamal Vyas: Thanks, Matt. Hello, everyone. Thank you for taking time out to join today’s webinar. I am Kamal Vyas, Enterprise Cloud Architect for NetApp IT. I’ve been with NetApp for 13 years now, and for the last five years I’ve been leading our Cloud and DevOps strategy here at NetApp IT. As I said, we are part of NetApp IT, so we are not part of any product sales or marketing team. So we don’t get, of course, any commission on any of these products. Our perspective is that of an IT practice and what works for a particular environment, and that’s the type of stories we share. Today I will be covering why and how we use NPS in our Cloud architecture, so I’m looking forward to a good discussion there.

Matt: Excellent. Well, thank you very much, Kamal. So before we start off, I want to give you a little broader background. NetApp IT, like most IT shops and most of our peer organizations, has been going through a lot of changes. Against this backdrop of digital transformation, the demands of the business are greater now than ever. The expectations of our customers are greater now than ever.

Our Challenge

Matt: People want solutions, they want broader options in the marketplace, they want capabilities that their own data center could never provide before, and those pose a lot of unique challenges to most IT shops. We have a lot of concerns; at the end of the day, it is IT, or the CIO, that’s responsible for the integrity of our data. And it’s the data that’s most in demand. Capabilities depend on data. And really, being able to create an architecture that allows an IT shop to leverage new resources, new capabilities, is paramount to our existence and to our company’s ability to thrive in the marketplace.

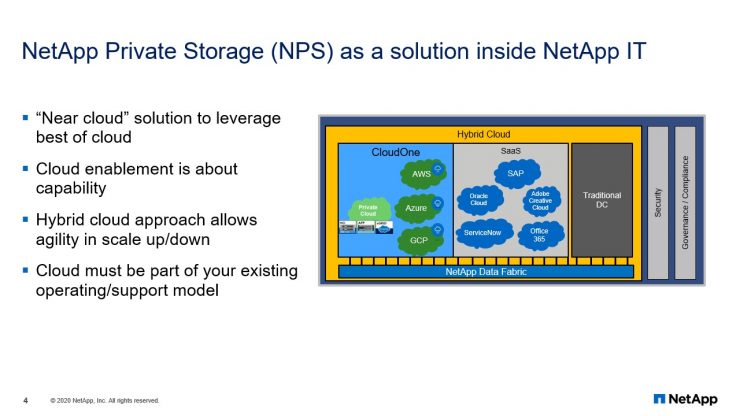

So before we start talking about a product or an architecture: NetApp Private Storage is an architecture. It’s not a SKU. It is a collective set of resources that are built near a cloud. But before I really get into that piece, let’s start with what our problem to solve is. And it always, always is about the why. Why do I need to change things? Why do I need to develop a new solution set? We’re going to be focused specifically on NetApp, but I’m sure most of you are working in IT, or are salespeople selling into IT, and we realize that understanding the whys, our motivation, is very important.

Let’s talk about what NetApp was five years ago. We were a hardware company, and our challenge was that when you deliver an engineered hardware solution, it takes time. In our case, it was taking between two and two and a half years. And in today’s world, going to market every two and a half years with a new solution probably isn’t going to work. I mean, come on. It wasn’t working. The other piece is that, like all of us, we want new capabilities, and the cloud offers a tremendous opportunity for new capabilities. The challenge is, how do you get there? The more traditional path is to go all-in on one cloud, but I’m a big believer that that’s very limiting. You need the best of cloud, and we’ll talk about that in a bit.

Also, in our more traditional view, we were very limited in our ability to enable innovation. You only have a finite set of resources in the data center. Unfortunately, a lot of innovation is not officially approved. I mean, that’s why it’s innovation. People try to figure out what to do next. And so innovation was hampered by our own on-prem offerings. And while we were looking out at the world and at cloud, like I referred to before, going all-in on one cloud initially raised a lot of concerns. I think a lot of them have been solved, especially on the security side. But being able to leverage the cloud in a way that we have access to our data, and can enable our data with new capabilities, was a primary concern for us. And really, while we’re all comfortable managing that on-prem, moving to the cloud does cause a lot of angst. What if I have to access that data through integration? Am I going to have to move that data to a different cloud? So all those things became considerations, and collectively they were our challenge in moving our IT shop forward and enabling not only IT but our business to access new resources and new capabilities to thrive in the marketplace.

Our Requirements of “the Cloud”

Matt: So with our challenges, we did realize that the cloud was probably the best place to look. It offers a tremendous set of capabilities that we could never have on-prem. And, as I mentioned before, one cloud solution really didn’t fit our requirements. Today, I need compute extensively, I need access to analytics, I need to run some services through SaaS. I mean, I think we’re all looking at machine learning and AI. And if you talk about that as a collective set of resources, there isn’t one cloud that really does all three the best.

And let’s make another point here. The clouds are trying to compete against each other, which means there’s going to be continuing innovation out there. So we were interested in a more “Best of Cloud” solution: the ability to take advantage of any and all resources out there, and to do it in a way that allowed us not just to scale today but to scale into the future. There’s no question that there’ll be continued offerings in the cloud, and we want to be able to leverage those new opportunities as they come in the future.

One of the primary things that we’re interested in is cost, because costs are always a concern. When you look at your on-prem environment, we’re always underutilizing our resources because we have to build to peak. The cloud really offers us the ability to scale those resources up and down and maintain a core, private data center with day-to-day resources, to really run lean and mean, and run as efficiently as possible. And then last and certainly not least, the ability to control and access and manage your data, but also expose your data to new resources, has to be paramount in looking at a future solution that really enables our IT shops in ways that were never before possible.

NetApp Private Storage as a Solution Inside NetApp IT


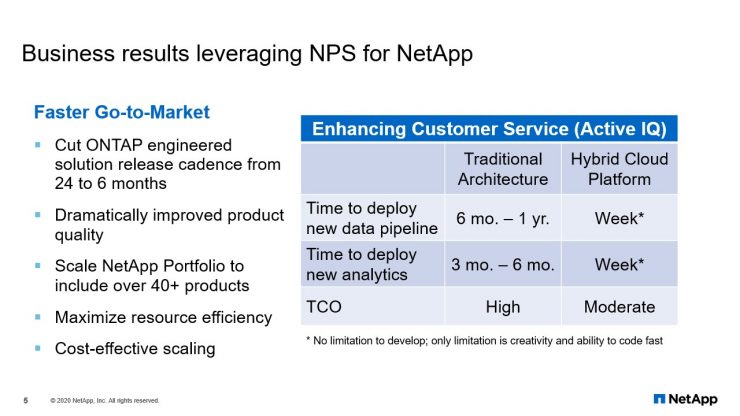

Business Results Leveraging NPS for NetApp


How Data Fabric Works with NetApp Private Storage

Kamal: Thank you, Matt. Let’s look behind the scenes at what the lower-level NPS architecture is and what benefits we get. As Matt touched upon, NPS is a key foundational block of our cloud architecture. We have been using it for almost four years now. And we are huge fans of it in NetApp IT: 80% plus of our current cloud workloads leverage this NPS solution today. In the next three slides we’ll go over how NPS fits in our cloud architecture and what benefits it provides. So with that, let’s get started.

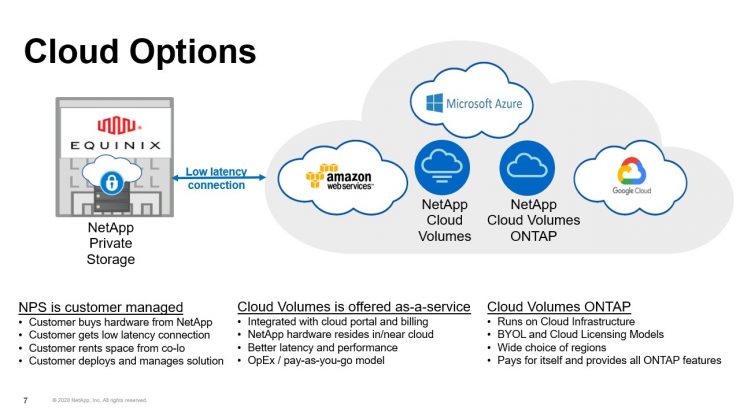

Cloud Options

Kamal: I wanted to do a quick compare and contrast of the storage offerings NetApp has in cloud. Customers have a lot of choices today in terms of what storage offering they want to get from NetApp. So on the left what I’m showing is NetApp Private Storage, NPS. This is a fully customer-managed solution. It’s like bringing your own storage to the cloud, or near the cloud. In this, you would essentially buy NetApp gear, install it in a colo, like Equinix as Matt pointed out, and connect it to the cloud with low-latency connections.

Customers will have full control of the data. On the flip side, they also have full responsibility for the life-cycle management of the solution. There are no restrictions in terms of what regions and what cloud providers you can build this with. Anywhere you have a colo and a low-latency connection, this can be turned up in the region.

Now, this is what we will focus on today, but just to say what the other options are: you also have NetApp Cloud Volumes, which is offered as a service in major cloud providers today. For this offering, NetApp has installed and integrated NetApp gear in or close to the major cloud providers’ environments, and it can be consumed and billed directly through the cloud provider. So you really don’t have to buy any colo space or equipment, and you don’t need any contract or relationship with NetApp. It is just billed directly through the cloud provider. It is available in all major regions, though not as widely as NPS would be. And the NetApp team is working hard to bring up new regions every quarter or so, and those are published on our website.

The key difference for Cloud Volumes compared to NPS is that with NPS, in a colo like Equinix, the data is in the customer’s control. But on Cloud Volumes, the data would be in NetApp’s control. On the flip side, if you use Cloud Volumes, you really don’t have to worry about life-cycle management of the solution, and it’s a full pay-as-you-go OpEx model, with patching and upgrades all handled behind the scenes by NetApp. So based on what your customers want, either control of the data or a pay-as-you-go model, I think they have choices and options in the cloud.

The third one is NetApp Cloud Volumes ONTAP. That’s the one Matt was pointing to. It is a software-only offering, which can run on any cloud provider that offers VMs, and in the back end it uses the infrastructure of the cloud provider. NetApp Private Storage and Cloud Volumes run on actual NetApp hardware. For Cloud Volumes ONTAP, the key difference is that it runs on the cloud provider’s infrastructure. But at the same time, it provides you all the ONTAP capabilities and features, like SnapMirror, dedupe, and the other things Matt already touched upon. This offering can also be enabled in any region, so there’s a wide choice of regions available. And you have a choice in how you buy it: bring your own license, buying through NetApp and bringing it to the cloud provider, or just rent it from the marketplace on any of these cloud providers.

In NetApp IT, we leverage all three options, but for today’s webinar we’ll be focusing on NPS. I just wanted to make sure you understand what choices are available in terms of cloud storage options from NetApp before we jump into that one.

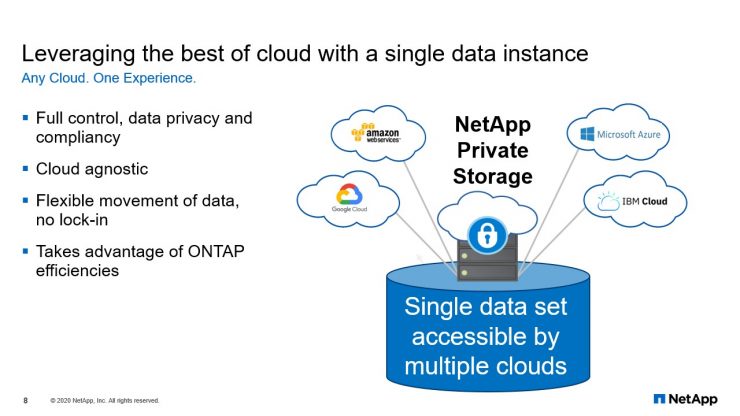

Leveraging the best of cloud with a single data instance

Kamal: So as we said, we are huge fans of NPS and 80% of our workloads today leverage NPS as a solution. We maintain a hybrid multi-cloud environment, and we’ve been running it for four years. We leverage all the major cloud providers: Amazon, Microsoft Azure, and Google. But for all three of them, the storage portion is always on NetApp Private Storage. We really don’t use any of the storage from the cloud providers. So what does that buy us?

Essentially what we do is choose very strategically the location where we want to build NPS. Ideally it is in a region where you have multiple cloud providers available and a colo that provides you a ready low-latency connection to all these cloud providers. That way the latency between the compute and the storage is sub-millisecond. And for most cases we target it to be under two milliseconds for our deployments.

So what does this type of environment buy? There’s some upfront work we have to do in terms of setting up of the colo and connectivity, but at the end of the day, we have full control of our data at all times. And as Matt touched upon, data is key. Essentially data is everything your company is. Computes and VMs are all replaceable, but if you have the data, you can take it anywhere and build your entire IT infrastructure or company from scratch.

Our compliance and privacy are handled just like how we handle them in our data centers. So for example, if companies have strict compliance needs like GDPR in Germany or in Europe and you want to make sure your data resides within certain geographical boundaries, those kinds of use cases can be deployed using NetApp Private Storage. You have full control of how you want to handle the data at rest and in transit, so you can encrypt only while the data goes to the cloud provider, or all the time the data sits in this particular data set.

Our audit processes stay the same. The way our auditors check and process audits for on-prem workloads is now applied to cloud workloads as well. It doesn’t have to change. That itself is a big relief for auditors, who really don’t have to worry about dissecting how storage or data is handled in the cloud provider’s environment. So if you have a customer in a tightly regulated industry, like banking, healthcare, or government, this itself is a huge selling point for them: having full control of their data at all times.

This also helps us be cloud agnostic. As no data resides in the cloud provider, we can easily move between the clouds. So let’s say you have an application running in Amazon, and for some reason, price or features or whatever, you want to move it to Azure. The migration is very easy because the data never moves. Data has gravity, and moving data is always hard. When you take data out of a cloud provider’s environment, it costs you a lot of money and time. So in this case, we would just build the app on Cloud B, connect it to the same data set, make a DNS change, and the application has moved from Cloud A to Cloud B. So no lock-ins. If we want better pricing or better features, this type of architecture in NetApp IT lets us handle those kinds of use cases and truly use what Matt was referring to, Best of Cloud.

As we said, when we started we were with Amazon, and as Google and Microsoft came up, there were a lot of features like Google Analytics and AI and ML. Moving those workloads from Cloud A to Cloud B is very easy with this kind of architecture. We have essentially no lock-in and can use any of these features.

Also we get all the enterprise-plus efficiencies ONTAP provides. For example, by turning on features like thin provisioning and dedupe in our NPS setup, we need almost 60% less storage. If this storage were living in the cloud provider’s environment, we would need more than double the capacity we have provisioned in NPS.

So all the innovation we have done on ONTAP in the last 20 years is now available for me to use for my cloud workloads too. Imagine that: without that 60% saving, my bill would be more than double. Of course, cloud storage is more expensive than ONTAP to begin with, but it would be more than doubled if I were to use the full capacity of that storage from the cloud providers.
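The “more than double” figure can be checked with simple arithmetic. The sketch below assumes the 60% saving is measured against the capacity that would be needed without thin provisioning and dedupe; the function name is made up for illustration and is not part of any NetApp tool.

```python
def raw_capacity_multiplier(savings_fraction: float) -> float:
    """Capacity needed without efficiencies, per unit of efficient capacity.

    Assumption: savings_fraction is relative to the un-optimized capacity,
    so 0.60 means the efficient footprint is 40% of the un-optimized one.
    """
    if not 0 <= savings_fraction < 1:
        raise ValueError("savings_fraction must be in [0, 1)")
    return 1.0 / (1.0 - savings_fraction)


multiplier = raw_capacity_multiplier(0.60)
print(f"Un-optimized capacity is about {multiplier:.1f}x the NPS footprint")
```

With a 60% saving the multiplier works out to 2.5, which is consistent with “more than double.”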

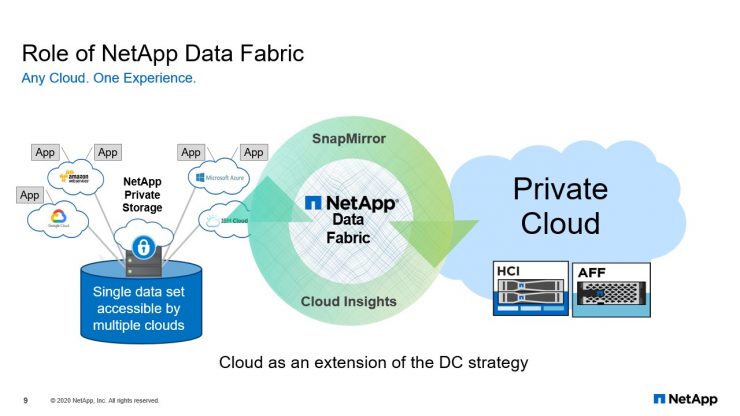

Role of NetApp Data Fabric

Kamal: And you can also extend this architecture by connecting NPS to the on-prem data center. NetApp has the best data mover; I’m sure you are all familiar with SnapMirror. If you combine SnapMirror with NPS, customers can create a true hybrid, multi-cloud data fabric.

For example, one of the key strategic initiatives we have in NetApp IT is to leverage public cloud for all DR workloads. So with SnapMirror, we can synchronize data between our private cloud and the public cloud, and we can have the primary applications running on-prem and the DR instances of them running in the cloud.

So with this, we really don’t need to invest any more money in buying DR hardware. If you look at DR hardware, it’s essentially the least-used hardware in your data center. It just gets used once every three months or so, when you’re doing a DR test, or once every five or ten years when you actually have to fail over an application. But with our NPS strategy, we can keep the data replicated to the cloud and only bring up the compute in the cloud provider for the hours or days we really need it and just pay rent.

We don’t have to run a three-year depreciation cycle on that dead hardware sitting in our data centers. And this has helped us tremendously to repurpose rows and rows of DR hardware we had in our data centers. We are moving all those workloads to the cloud, and we are extending the life of our data centers by many years with that type of architecture.

By the way, this doesn’t just apply to DR. For any of your temporary workloads, for example DevTest or Sandbox, you really don’t have to maintain that extra, variable capacity in your private cloud. With Data Fabric, SnapMirror, and NPS, you could move all of those temporary workloads to public cloud and rent the capacity, versus buying it and using it only momentarily, for a month or a year or whatnot.

This architecture also helps with cloud migration. Moving an application from on-prem to cloud, we really don’t have to change the application architecture. If you’re using Snapshots and NFS or a certain hierarchy of volumes, the app can move as-is to the cloud without trying to fit it into blob storage or EBS or S3. So essentially it fuels your cloud migrations. Migration time becomes a lot less. Downtime is also a lot less, because SnapMirror keeps the data replicated. And you can really increase the speed at which cloud migration goes with this kind of architecture.

All the tools we use and all the personnel we have for on-prem storage management, like storage architects, are the same ones now managing the storage in the public cloud. We really don’t have to invest in buying new tools or getting new resources to manage our cloud workloads. So if you combine all of these, how much we save by using enterprise-plus features, the repurposing of DR space in the data centers, and the tools and personnel we reuse, this architecture has helped us tremendously reduce the total cost of ownership of our cloud storage.

And truly, cloud has become an extension of our data center strategy, to the point where we are now eliminating a lot of our data center regions and moving a bunch of workloads over to the cloud, using it not only for resources but also for economic advantages in terms of cost efficiency and things like that.

Q: In the circle, there was Cloud Insights mentioned, the actual service. Can you maybe explain a little bit how you use that?

Kamal: Yeah, yeah. Definitely. And that’s the point I was making: we really don’t have to invest in any new tools. So Cloud Insights is our SaaS offering. As a lot of customers may have heard, we used to have OCI, OnCommand Insight, as the on-prem offering, which customers can buy and install in their data centers. It gives you a lot of value in managing your storage solution. Now, the new offering is Cloud Insights, which you can buy as a SaaS offering from NetApp, and point at and manage not just your private cloud workloads but also your public cloud workloads directly through this SaaS portal. It gives you real-time information on your IOPS needs, your hardware usage, what kind of efficiencies you’re gaining, what kind of features you can turn on to get the best out of your NetApp investments, and things like that. So if you haven’t looked into that, I think we can definitely do a webinar just on that and the benefits it provides. That’s our cloud-based SaaS offering for managing all of our NetApp technology, plus more. It doesn’t only work on NetApp technology. Any storage gear or any compute gear can also be managed using Cloud Insights.

Elias: It’s hardware agnostic, I’m guessing.

Kamal: Yes, it is.

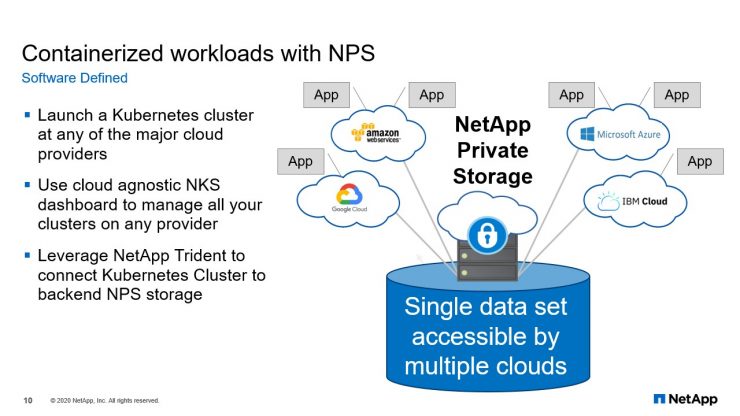

Containerized workloads with NPS

Kamal: This architecture also supports containerized workloads. For example, if you have Kubernetes clusters running in any of your cloud providers, NetApp also has an open-source Kubernetes plug-in called Trident. You can deploy it on your Kubernetes clusters and use it to connect back to NPS, and leverage ONTAP as the backend storage for your containerized applications. All the benefits and features we talked about can now be extended to containerized workloads as well. So not just for VMs or infrastructure as a service, NPS provides great value for containerized customers as well.
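As a rough illustration of how a Kubernetes cluster might request ONTAP-backed volumes through Trident, the sketch below builds a StorageClass and a PersistentVolumeClaim as Python dictionaries and prints them as JSON, a format `kubectl apply -f` accepts. The `csi.trident.netapp.io` provisioner and the `ontap-nas` backend type are Trident's documented values; the object names, the volume size, and the assumption that a Trident backend pointing at the NPS system is already configured are hypothetical and not taken from the talk.

```python
import json


def storage_class(name: str, backend_type: str = "ontap-nas") -> dict:
    """StorageClass that hands provisioning to Trident's CSI driver."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "csi.trident.netapp.io",
        "parameters": {"backendType": backend_type},
    }


def volume_claim(name: str, storage_class_name: str, size: str = "10Gi") -> dict:
    """PersistentVolumeClaim that an application pod could mount."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteMany"],  # NFS volumes can be shared
            "storageClassName": storage_class_name,
            "resources": {"requests": {"storage": size}},
        },
    }


# Hypothetical names, chosen only for this example.
sc = storage_class("nps-nas")
pvc = volume_claim("app-data", sc["metadata"]["name"])
print(json.dumps(sc, indent=2))
print(json.dumps(pvc, indent=2))
```

Each printed document can be saved to its own file and applied to a cluster where Trident is installed.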

That summarizes the reasons for, and the benefits we get from, using NPS as an architecture. Hopefully it gives you some insight into not just what NPS is, but also the use cases and options customers have that this kind of architecture can help them deliver.

Q: Do you use each of the public clouds or have a preferred platform?

Kamal: Yeah, good question. When we started this journey four years back, our preferred cloud provider, really the only cloud provider that was close to providing these services, was Amazon. And that still remains the most heavily used cloud in NetApp IT’s environment. Over time, though, services beyond infrastructure became the differentiator. There’s no particular reason for choosing cloud A, B, or C if you’re just using VMs and load balancers and whatnot. Their pricing, regions, and geographies are pretty much the same for our use cases, unless you are in a special geography. But if you want to use advanced features like AI and ML, or other advanced features such as Microsoft’s DevOps pipelines and whatnot, those are the special use cases where we have ventured into other cloud providers. Amazon still remains the most heavily used, because it just had a head start. All three of them, in terms of IaaS, have the same capabilities, and if you go beyond that, they each have their own niche specializations that we try to use them for.

Q: Are you looking at any other Cloud providers at the moment?

Kamal: No, no. I think that’s pretty much it for now. We don’t have any others on the radar for the next year. Or at least two, I would say. Things change fast in this environment, but as far as I can see, no.

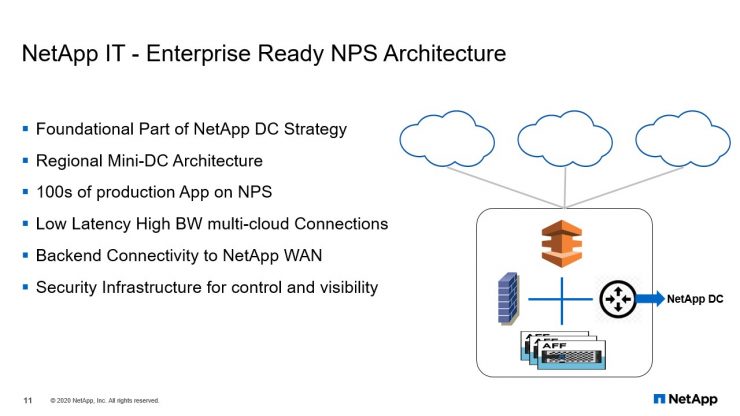

NetApp IT – Enterprise Ready NPS Architecture

Kamal: We looked at the benefits, so now how do you architect an NPS solution? The architecture can be simple. In some of our regions, we essentially just have NPS connected through a direct link to the cloud provider. But in some cases it could be a real enterprise use case. Let’s say in regions where you want to completely replace your data center, you could have a complete mini data center type of design there. Especially as your cloud footprint grows, you could build that to be a true enterprise-grade environment.

So NPS is the foundational block for our cloud adoption, as I said. For the advanced architecture in some regions, we strategically choose the location of the colo: one where we want to be from a geography perspective, which provides the best latency to our user population wherever they are, and which also has sub-millisecond latency to multiple cloud providers. In those kinds of deployments, we don’t just put storage and direct connections in that colo. It’s a complete mini data center design. It has routers connecting back to our data centers for SnapMirror replication, so data can be replicated and workloads can be moved between clouds. It even has firewalls to provide control and visibility over what traffic can go between clouds and to our data centers.

And if you are familiar with the concept in the cloud, it’s our full-fledged transit VPC. The only difference is that if you were to build a transit VPC in an Amazon environment, it would only route between multiple VPCs in that one environment. This one is a true multi-cloud transit VPC, and really, this is our control point and visibility point: for anything that goes between clouds, or from a cloud to our data centers, we can apply full control and visibility through the firewalls that are there. This foundation can scale to support a lot of applications. We already have hundreds of production apps leveraging this architecture today, and it can go even beyond that, just as fast as we can migrate.

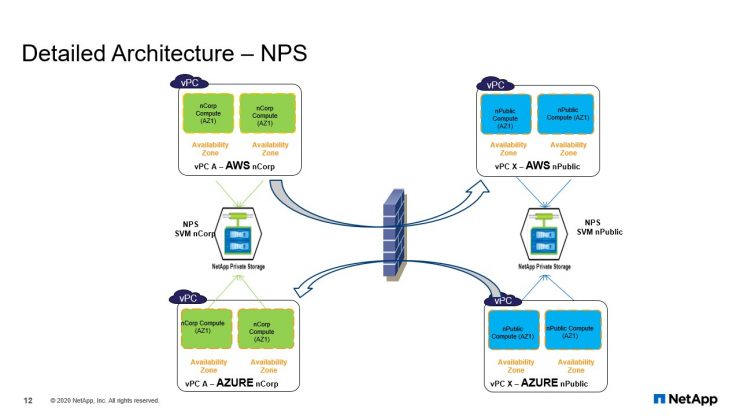

Detailed Architecture – NPS

Kamal: Here’s a closer look under the hood. This could be used for multiple use cases, like if you have multiple customers, or if you have multi-tenancy needs for, let’s say, your business unit 1 and business unit 2, and you have multiple clouds. What we are showing in the top portion, for example, is built in Amazon. You have DMZ workloads on the right side, which are public facing, and internal workloads on the left side. You could have your own reasons to have separate VPCs, like, as I said, business units or different customers. And below, we have Azure, which has a similar architecture, with the same business units running on that side, too.

So now, the in-corp customers can use the same NetApp Private Storage. And if you know NetApp features, we also support software-defined logical partitioning with SVMs, storage virtual machines. So what we do in our environment is create an SVM on top of that common NPS for each of those customers.

So irrespective of which clouds we are in, Amazon, Azure, or Google, from all of those places they have free access to connect to that SVM, which is created in the common data set, the common NPS we have for all the cloud providers. And we do that for each of the multiple customers we have. I’m just showing two, but imagine we have lots and lots of these VPCs on different clouds for different partitioning and logical separation reasons. Now if they want to go between those VPCs, let’s say an in-corp customer wants to talk to an in-public customer, or between those zones, then they go through the firewall in between. So we have full control and visibility into who can talk to what and what is allowed and permitted. It’s the same exact hardware; it just now supports multiple clouds and is also carved into software-defined partitions for VPC and low-level separation and segmentation needs. So the idea here is a true multi-tenant environment with full security, enabled by software-defined features like VLAN extensions and the storage virtual machines that NetApp provides.
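The tenancy rules described here can be captured in a small model. This is only a sketch of the idea, not NetApp IT's actual configuration: the class, the `svm-<tenant>` naming scheme, and the example VPC names are all invented for illustration. It encodes two rules from the talk: VPCs belonging to the same customer share one SVM regardless of cloud, and traffic between two different VPCs passes through the firewall in the colo.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vpc:
    name: str    # hypothetical VPC identifier
    cloud: str   # e.g. "aws", "azure", "gcp"
    tenant: str  # customer or business unit that owns the VPC


def svm_for(vpc: Vpc) -> str:
    """One SVM per tenant on the shared NPS system, whatever the cloud."""
    return f"svm-{vpc.tenant}"


def crosses_firewall(src: Vpc, dst: Vpc) -> bool:
    """Traffic between two different VPCs is routed through the firewall."""
    return src.name != dst.name


aws_corp = Vpc("aws-corp", "aws", "corp")
azure_corp = Vpc("azure-corp", "azure", "corp")
aws_public = Vpc("aws-public", "aws", "public")

# Same tenant on two clouds lands on the same SVM.
print(svm_for(aws_corp), svm_for(azure_corp))
# Corp-to-public traffic is inspected by the firewall.
print(crosses_firewall(aws_corp, aws_public))
```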

Q: With NPS, can compute remain on hardware run in the data center? Are there egress costs to consider when migrating to the Cloud?

Kamal: Good question. If you have NPS, can compute remain on hardware running in the data center? You could; it’s essentially just like NetApp storage in your data center. We call it NPS when you use it near the cloud with compute from the cloud provider’s environment. But if you want to use the same technology on-prem, then it’s just regular NetApp storage, which you can definitely run in your private data centers, too.

On the second part of the question, there are definitely egress costs you have to consider. Because NetApp Private Storage connects as an external entity outside the cloud provider’s environment, you will have charges for data that exits the cloud and goes toward NetApp Private Storage. So essentially, if you have an application that is reading data from NetApp Private Storage, there’s no charge for that. But if you are writing back to NetApp Private Storage, you would have to pay charges for that. And if you have connections like Direct Connect and whatnot, it’s a fraction of your Amazon bill. We do those kinds of calculations on a monthly basis, and less than 1% of our total Amazon bill is for data charges. But if you have a use case with really, really heavy writes out of your environment, yeah, you definitely want to consider what your egress charges would be. And if you’re using things like Direct Connect, or Dedicated Interconnect or ExpressRoute, depending on what cloud you use, they give you really the best pricing for egress charges.

So that definitely is a cost to consider in this case. But in the grand scheme of things, like how much you save from the efficiencies you get plus repurposing your DR and whatnot, I think for our IT case that’s a negligible cost. And for our data numbers, it’s not a big issue. But yeah, it’s definitely something that should be accounted for in heavy-write environments.
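
A minimal sketch of the egress reasoning above, assuming a flat per-GB rate. The function names and every number in the usage example are hypothetical placeholders, not NetApp IT's figures or any provider's published pricing. The only rule taken from the talk is that writes from cloud compute to NPS leave the cloud and are billed, while reads from NPS are not.

```python
def monthly_egress_cost(gb_written_to_nps: float, rate_per_gb: float) -> float:
    """Estimate the monthly egress charge for data written from cloud compute to NPS.

    Reads from NPS enter the cloud and are not counted here; only data that
    exits the cloud (writes to NPS) is billed, at an assumed flat rate per GB.
    """
    if gb_written_to_nps < 0 or rate_per_gb < 0:
        raise ValueError("volume and rate must be non-negative")
    return gb_written_to_nps * rate_per_gb


def egress_share(egress_cost: float, total_cloud_bill: float) -> float:
    """Return the egress charge as a fraction of the total cloud bill."""
    if total_cloud_bill <= 0:
        raise ValueError("total bill must be positive")
    return egress_cost / total_cloud_bill


if __name__ == "__main__":
    # Hypothetical inputs: 5,000 GB written per month at $0.02 per GB,
    # against a $20,000 monthly cloud bill.
    cost = monthly_egress_cost(5_000, 0.02)
    share = egress_share(cost, 20_000)
    print(f"egress: ${cost:.2f} ({share:.2%} of the bill)")
```

Running the same calculation with your own monthly write volume and your negotiated rate shows quickly whether a heavy-write workload pushes the share well above the sub-1% level mentioned above.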

Q: When storage workloads are migrated to Cloud Volumes/Cloud Volumes ONTAP, what happens to the compute uses? Do they need to be migrated to Cloud, too?

Kamal: Yes. So essentially, with NPS or Cloud Volumes ONTAP, you can get the data replicated to the cloud. Depending on how you use compute, the migration can be quite simple. If you are truly a software-defined organization where all your configurations and binaries and whatnot are defined as code, you could just bring the containers up on the right side in Kubernetes, or a VM up on the right side, and load those parameters using a software-defined tool like Ansible. Or if you have a really hard dependency on the OS, there are multiple tools out there to migrate your VM from a Cloud VMware to EC2, for example. So that is something to figure out based on what kind of use case you are running.

For our purposes, we have been very deliberate about saying that everything we define for a VM, for our compute, has to be a software-defined entity. We really don’t want to depend on OS-level or hypervisor-level dependencies and features, because those make it really hard to do cloud migration. So for us, it’s just a matter of building the new vanilla flavor of container or VM on the right side, putting all the colors on it using Ansible or, let’s say, Helm in the case of Kubernetes, and connecting it to the storage, which is already replicated using NetApp technology for migrations.

Key Takeaways

Kamal: Definitely, as we said, the whole idea is that NPS gives you the best of both worlds. It lets you use the cloud providers’ compute elasticity, where you can scale up and down and use any cloud provider on demand for the features you want, but at the same time maintain full control of your data. So the idea is that security, data privacy, compliance, audits, all of those things you have been doing in your on-prem data center, can now apply to cloud workloads too. So those are big, big selling points for NPS. And especially for regulated companies, it resonates really well. NetApp, as we said, has the best data mover capability in the industry: SnapMirror, with our semi-sync or multi-sync, whatever technology you want to use to manage, move, and protect your data across different clouds. So definitely NPS.

Along with that, it truly is your data fabric, connecting your multi-cloud and hybrid cloud environments, plus the use cases you can deliver on top of that. Whether it’s cloud burst, where you just use the extra capacity of the cloud, or cloud migrations, or DR, the possibilities are endless. So that definitely is a key takeaway.

And security. We talked about software-defined features like SVMs and encryption, at rest and in transit. All of those are really enterprise-grade, and most workloads which really were not willing to move to the cloud for those kinds of reasons can now take advantage of them. Bring your storage to the cloud. Have all these features turned on at the same time. Have an OpEx model for the compute, where you can scale up and scale down as needed and be more cost-efficient in your environments.

This kind of foundation definitely brings a lot of value to our cloud adoption journey, and we are investing more and more heavily into it.

Thank you for reading. Click here for the recording of this webinar.